Research

This page summarizes my current research. See the Publications page for papers.

Theoretical Foundation for RL

Reinforcement learning (RL) has become one of the fastest-growing fields in machine learning. Over the past decade, RL applications have achieved major breakthroughs, such as defeating world champions in Go and StarCraft II. However, the use of RL in real-world problems remains limited. The main challenge is that most RL methods require interaction with an environment, which is often infeasible in practice because of high costs and potential legal, ethical, or safety concerns. My research on RL foundations aims to develop efficient algorithms that learn from offline data with low (optimal) sample and computational complexity. There are two main tasks in this setting. Offline Policy Learning (OPL): learning the optimal strategy for a sequential decision-making problem from logged historical data. Offline Policy Evaluation (OPE): making a counterfactual prediction of the performance of an undeployed strategy using historical data.
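As a minimal illustration of the OPE task, the sketch below uses trajectory-wise importance sampling, a textbook baseline shown here only for intuition and not the estimators developed in this research. It assumes a small tabular problem in which both policies are given as arrays of action probabilities; the function name, argument names, and data layout are illustrative assumptions.

```python
import numpy as np

def importance_sampling_ope(trajectories, target_policy, behavior_policy, gamma=1.0):
    """Estimate the value of target_policy from logged trajectories.

    trajectories: list of trajectories, each a list of (state, action, reward).
    target_policy, behavior_policy: arrays of shape (n_states, n_actions)
        holding action probabilities (hypothetical tabular layout).
    """
    estimates = []
    for traj in trajectories:
        weight = 1.0   # cumulative likelihood ratio of the trajectory
        ret = 0.0      # discounted return observed in the log
        for t, (s, a, r) in enumerate(traj):
            weight *= target_policy[s, a] / behavior_policy[s, a]
            ret += (gamma ** t) * r
        # Reweight the logged return as if it had been drawn from the target policy.
        estimates.append(weight * ret)
    return float(np.mean(estimates))

# Toy log collected by a uniform behavior policy over two actions in one state.
logged = [[(0, 0, 1.0), (0, 1, 0.0)], [(0, 1, 2.0)]]
behavior = np.array([[0.5, 0.5]])
always_action_1 = np.array([[0.0, 1.0]])
value_estimate = importance_sampling_ope(logged, always_action_1, behavior)
```

The estimate is unbiased when the behavior policy assigns positive probability to every action the target policy can take, but its variance grows quickly with the horizon, which is one reason sample-efficient OPE is a research question in its own right.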

I also provide provable guarantees for online RL, where exploration is permitted, and for low-adaptive RL, which bridges the gap between online and offline RL. I approach these problems from several perspectives, including posterior sampling, adversarial robustness, zero-sum games, and bandit formulations.

Practical Reinforcement Learning

To move from foundations to practice, I also develop principled methodologies that make RL effective in practical applications. My work has successfully applied RL algorithms to physics-based robotics simulators, computer games, and internet network management problems.

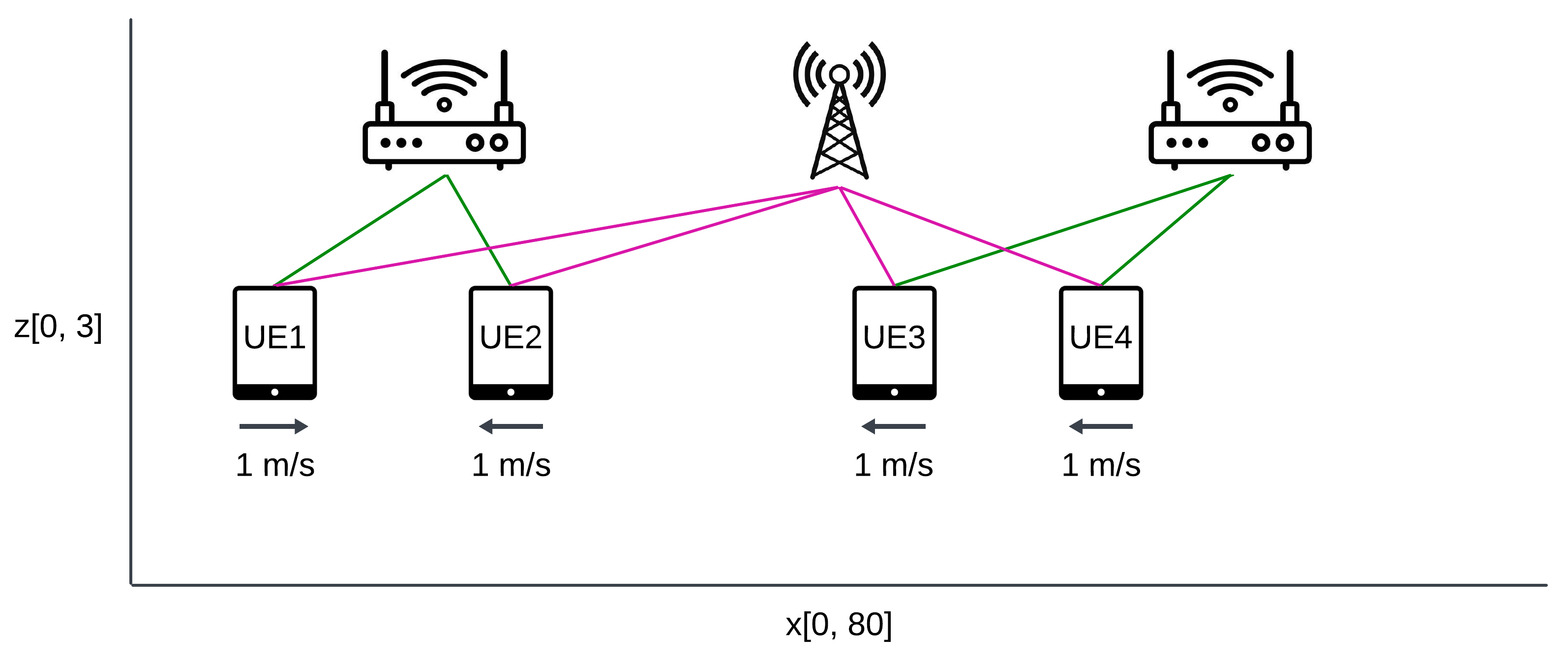

For multi-access network traffic management, our pessimism-based algorithm outperforms existing state-of-the-art deep RL methods in the high-fidelity NetworkGym environment, which simulates multiple network traffic flows and multi-access traffic splitting. In offline RL, we introduced the Closed-Form Policy Improvement Operator, which updates policies using a closed-form solution rather than traditional gradient descent, and it has proven competitive with leading RL methods in MuJoCo robotic simulators. Additionally, we reformulated the value function learning problem as a target network learning problem, yielding empirical improvements on Atari games.

LLM Alignment meets Sequential Decision-making: Theory and Practice

The theoretical understanding of large language models (LLMs) lags far behind their empirical success. I take a step toward closing this gap by studying speculative decoding, a popular decoding method that has been deployed in many real-world products and achieves a 2-2.5x LLM inference speedup while preserving output quality. I formalize the decoding problem through a Markov chain abstraction and characterize its two key properties: output quality and inference acceleration. The analysis covers the theoretical limits of speculative decoding, batch algorithms, and the tradeoff between output quality and inference acceleration. It uncovers fundamental connections among the components of LLMs through total variation distances, showing how they interact to affect the efficiency of decoding algorithms.
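To make the role of total variation distance concrete, here is a minimal sketch of one draft-then-verify step of standard speculative sampling over a toy vocabulary. It is a simplified illustration, not the Markov chain analysis itself. The names `p` (next-token distribution of the large target model) and `q` (next-token distribution of the small draft model) are assumptions introduced for the example. In this single-token setting, the probability that a drafted token is accepted equals one minus the total variation distance between `p` and `q`.

```python
import numpy as np

def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * float(np.abs(p - q).sum())

def acceptance_probability(p, q):
    """Probability that a token drawn from the draft q is accepted under p."""
    return float(np.minimum(p, q).sum())

def speculative_step(p, q, rng):
    """One draft-then-verify step; returns (token, accepted)."""
    x = rng.choice(len(q), p=q)                  # draft model proposes a token
    if rng.random() < min(1.0, p[x] / q[x]):     # target model verifies it
        return int(x), True
    # On rejection, resample from the normalized positive part of (p - q).
    residual = np.maximum(p - q, 0.0)
    residual = residual / residual.sum()
    return int(rng.choice(len(p), p=residual)), False

p = np.array([0.5, 0.3, 0.2])   # toy target distribution
q = np.array([0.2, 0.3, 0.5])   # toy draft distribution
rng = np.random.default_rng(0)
tokens = [speculative_step(p, q, rng)[0] for _ in range(20000)]
empirical = np.bincount(tokens, minlength=len(p)) / len(tokens)
```

The empirical token frequencies match `p`, which is the sense in which output quality is preserved, while the acceptance probability, and hence the speedup, depends on how close the draft is to the target.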

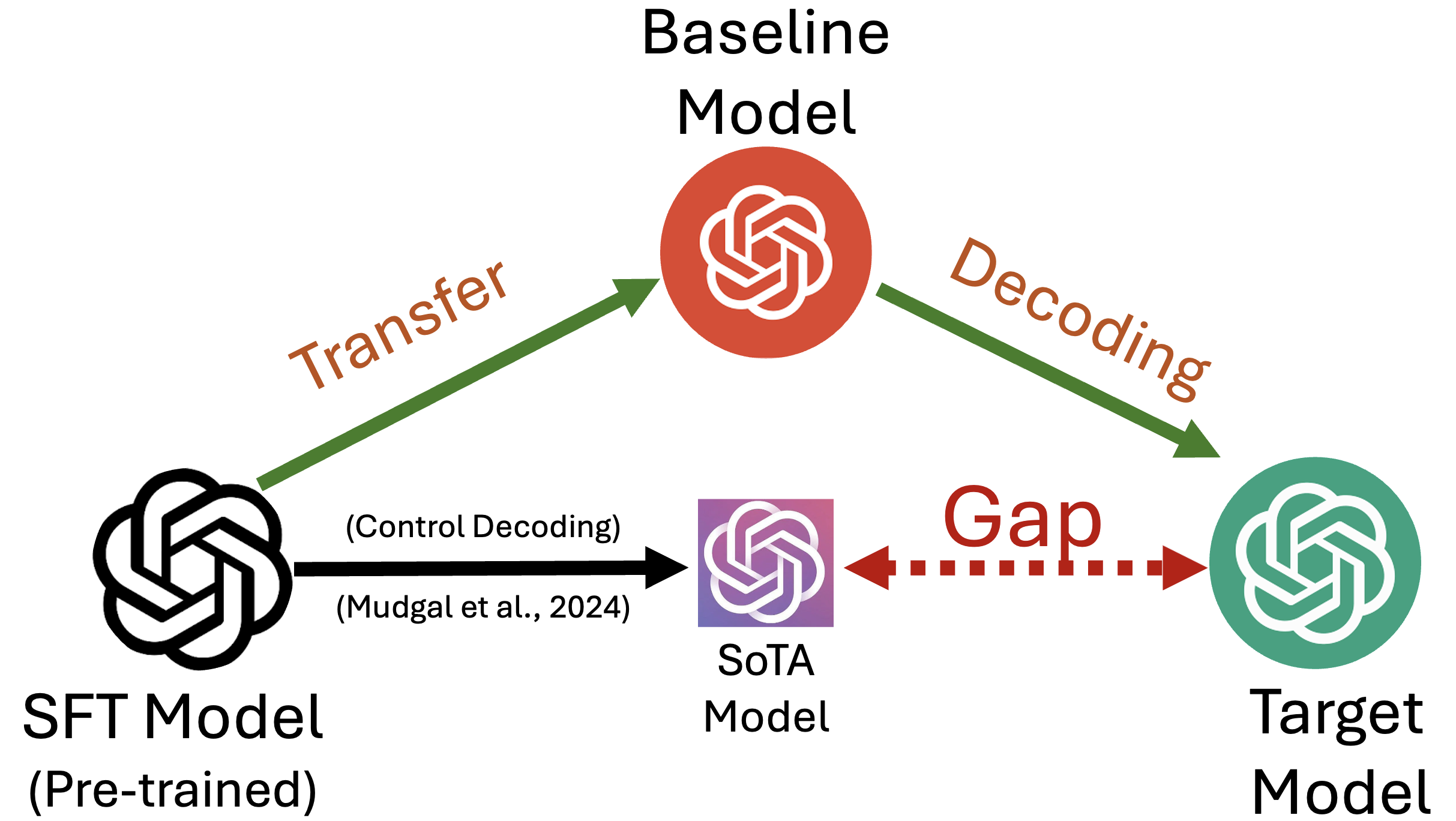

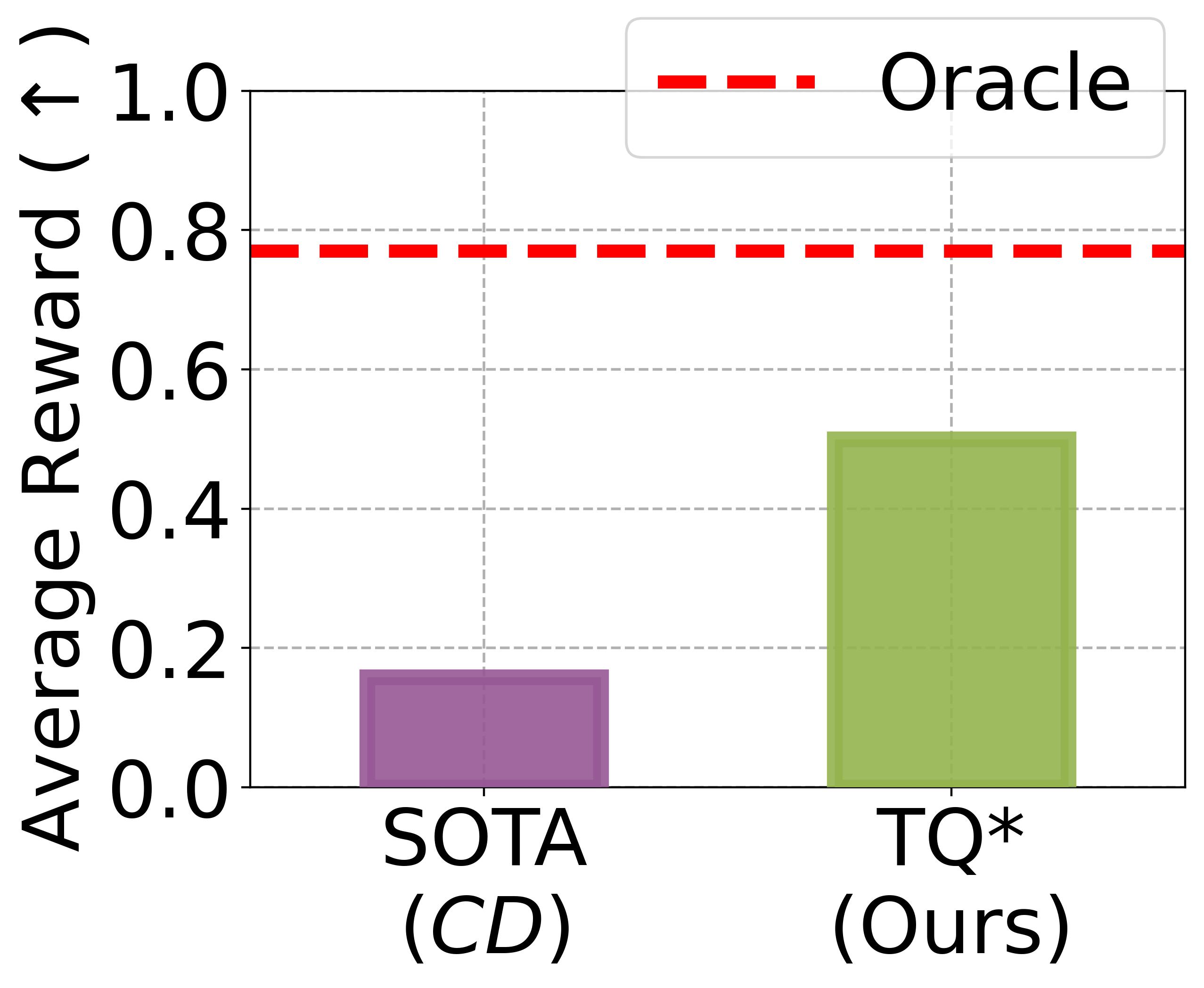

Alignment of large language models (LLMs) is essential to ensure that these models behave in ways that are safe, ethical, and consistent with human values and intentions. Given a reward model and an LLM to be aligned, alignment can be cast as a (sparse) reinforcement learning problem. Ideally, a principled decoding method would use the optimal Q* function, but this function is unknown. The prior method estimates Q* by Q-SFT, whereas our method estimates the correct Q* function via the Transfer procedure.
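For intuition on how a Q function can guide decoding, the sketch below applies the standard closed form from KL-regularized RL, in which the aligned next-token distribution is proportional to the reference distribution times exp(Q / beta). This is a generic illustration under stated assumptions, not the Transfer procedure described above: `ref_logprobs`, `q_values`, and `beta` are hypothetical inputs, and the Q values are placeholders rather than outputs of any real estimator.

```python
import numpy as np

def q_guided_distribution(ref_logprobs, q_values, beta=1.0):
    """Reweight a reference next-token distribution by per-token Q values.

    ref_logprobs: log-probabilities of the reference LLM over the vocabulary.
    q_values: estimated Q(state, token) for each candidate token (placeholder).
    beta: KL-regularization strength; larger beta stays closer to the reference.
    """
    logits = ref_logprobs + q_values / beta
    logits = logits - logits.max()        # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

ref_logprobs = np.log(np.array([0.5, 0.3, 0.2]))
q_values = np.array([0.0, 0.0, 1.0])      # toy values favoring the last token
guided = q_guided_distribution(ref_logprobs, q_values, beta=0.5)
```

With all Q values equal, the guided distribution reduces to the reference distribution; the quality of the guidance therefore rests entirely on how accurately Q* is estimated.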

Evaluation & Benchmarks for Generative AI

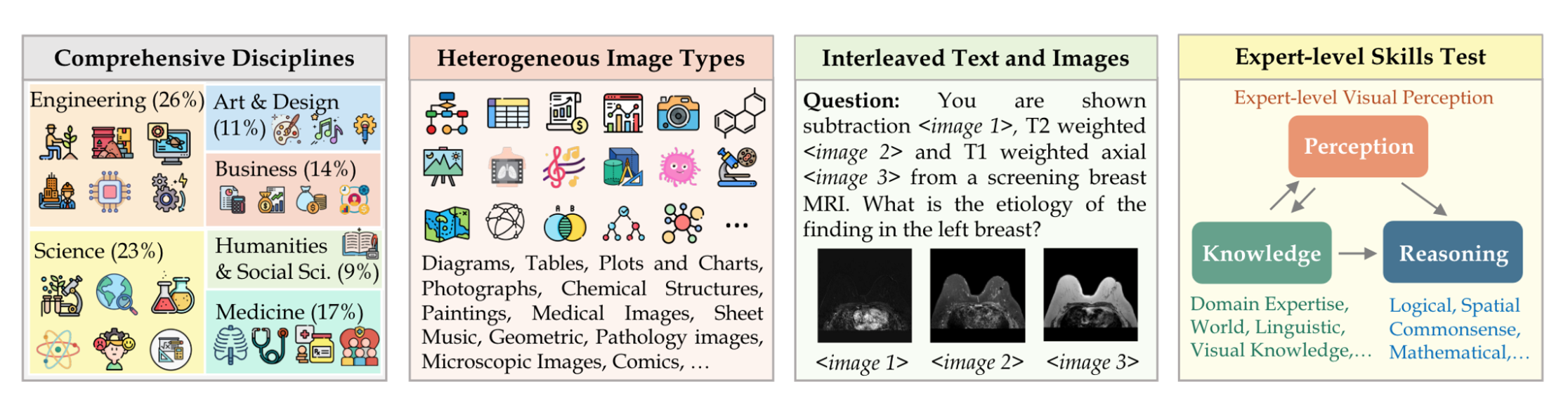

Measuring the performance of generative AI models is challenging because their outputs are subjective, open-ended, and lack clear ground truth, so evaluation must cover multiple dimensions such as relevance, coherence, and diversity. This becomes even more complex in multimodal evaluation, where tasks involve several formats such as images and text. In collaboration with other researchers, we created MMMU, a benchmark designed to evaluate expert-level multimodal understanding and reasoning across six diverse disciplines and over 30 subjects. My contribution: 400 carefully curated multimodal questions out of the benchmark's 11.5K total.

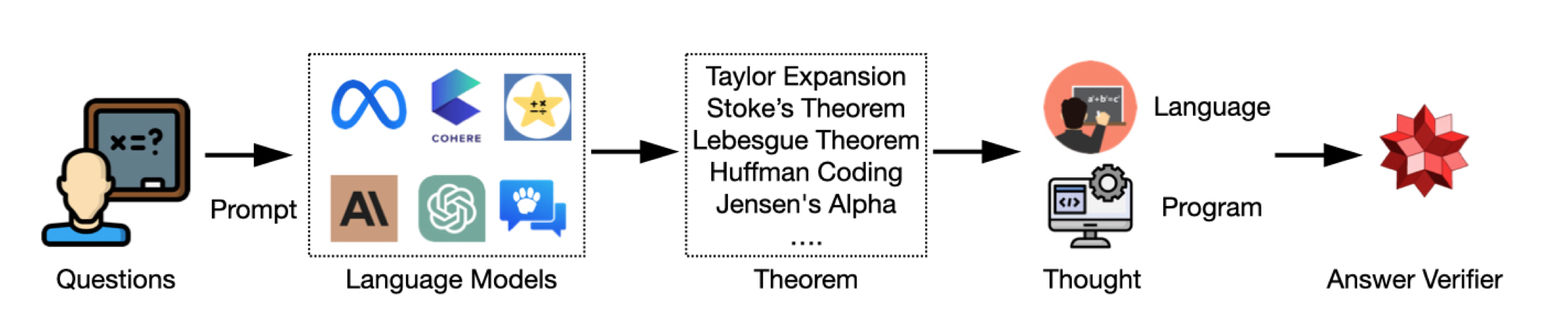

Recent large language models (LLMs), such as GPT-4, have made significant progress in solving fundamental math problems like those in GSM8K, achieving over 90% accuracy. However, their ability to tackle more complex problems that require domain-specific knowledge, such as applying theorems, remains underexplored. To address this, we developed TheoremQA, the first theorem-driven question-answering dataset aimed at evaluating AI models’ ability to apply theorems to solve advanced science problems. My contribution: nearly 100 curated college-level math competition questions out of the dataset's 800 total.

AI for Science & Engineering

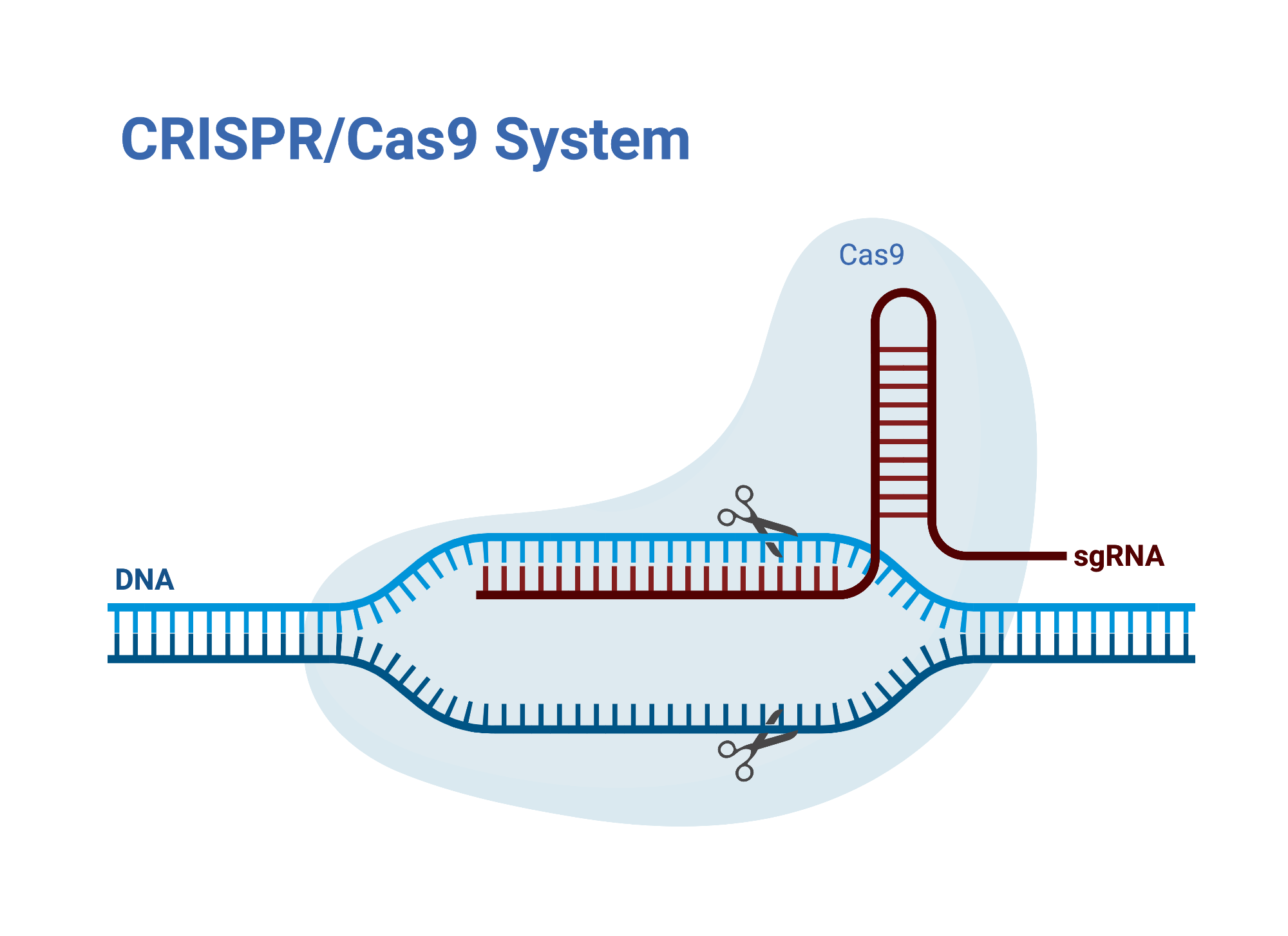

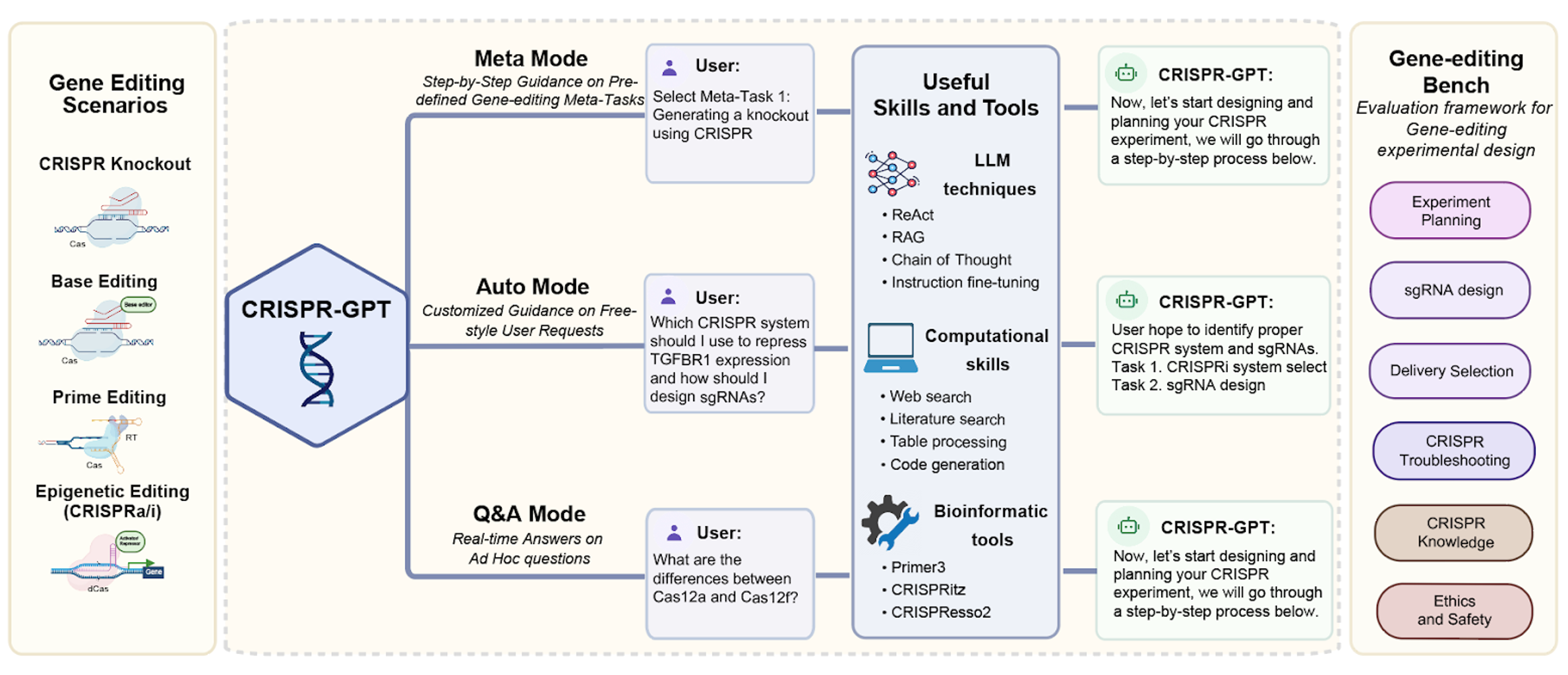

Genome engineering technology has revolutionized biomedical research by enabling precise genetic modifications. One exciting example is CRISPR, which can be used to develop critical advances in patient care or even cure lifelong inherited diseases. Its development was recognized with the 2020 Nobel Prize in Chemistry. To help beginner researchers carry out gene editing from scratch, we designed CRISPR-GPT, an LLM agent system that automates and enhances the CRISPR-based gene-editing design process. The system is driven by multi-agent collaboration and incorporates domain expertise, retrieval techniques, and external tools. I contributed to CRISPR-GPT by fine-tuning a specialized LLM on a decade’s worth of open-forum discussions among gene-editing scientists.